When ChatGPT first launched, it seemed like the whole internet was talking about it. Now that the world has had time to see the risks and rewards for itself, pharma must decide whether to take it or leave it.

Words by Jade Williams

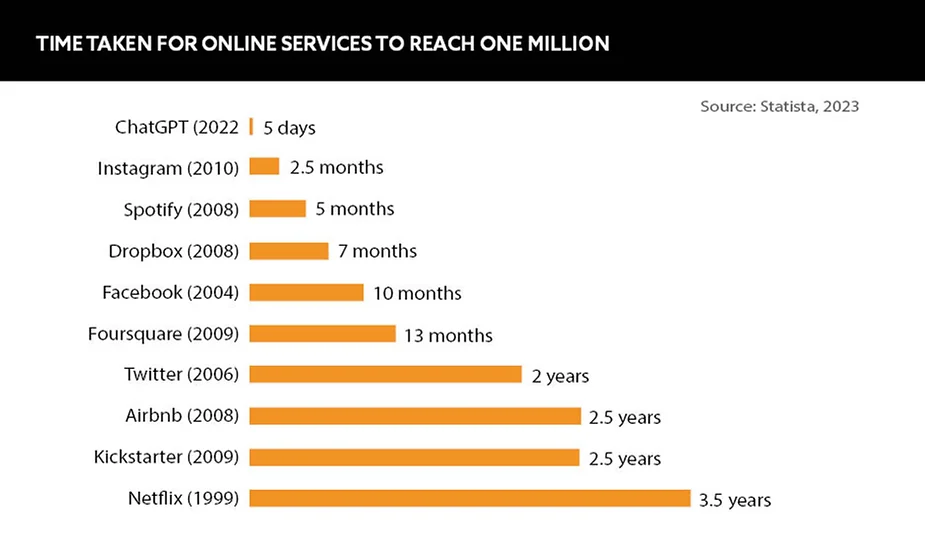

ChatGPT became wildly popular the world over in a matter of days. With the potential to automate and improve customer service, drive sales and increase brand awareness, the tool is being investigated by companies in all sectors as a marketing shortcut that could potentially save time, money and resources.

However, as is well known, artificial intelligence is only ever as good as the data it’s based on, and there are several grey areas and red flags that suggest ChatGPT may not be all it’s cracked up to be: regulation, disinformation and potential patient harm, to name but a few. Given the drawbacks, should the pharmaceutical industry incorporate the software into its marketing activities?

Fame to fad

When it became free and open to use in late 2022, ChatGPT was rapidly adopted. Julia Walsh, CEO, Brand Medicine International, comments that the software is incredibly attractive to a variety of stakeholders as it “takes the work out of search by consolidating information in a single response that is easy to understand”. As a result, she adds, users no longer have to click on multiple links and trawl through different websites to find the answers they need. From advising on meal preparation to explaining the concept of programming to a five-year-old, there seems to be nothing this model cannot do.

All that glitters is not gold, however, and while ChatGPT is positioned as a hot new alternative to Google, its answers can be filled with misinformation. The software is undeniably advanced, but as Darshan Kulkarni, Principal Attorney, The Kulkarni Law Firm, who focuses on regulation and compliance for life sciences companies, states: “ChatGPT doesn’t cite where it gets its information from, it just creates an amalgamation of multiple sites and spits out an answer.” In a sector as vital to world health as pharma, can companies really afford to risk patient health for the sake of trying new software?

Misinformation mayhem

Since ChatGPT’s launch, companies such as Google and Microsoft have been trialling ChatGPT-style chatbots in their own businesses. However, almost immediately after launch, people began to find discrepancies in the information. When Microsoft unveiled its new ChatGPT-powered Bing search capabilities, users almost instantly stumbled upon incorrect answers to queries and even found the engine suggesting conspiracy theories. A similar fate hit Bard, Google’s rival chatbot, when users spotted an error in its advertisement relating to the James Webb telescope, a mistake that wiped $100bn off the company’s market value.

If these already technologically savvy companies, known for their excellent software and programming skills, can’t get the technology right, how can the pharma industry expect to do better? “ChatGPT has no respect for the truth,” asserts Paul Simms, CEO, Impatient Health. “It’s designed to be accessible and intelligent but not necessarily correct.” Because the software gathers its answers to user queries from all over the web, the responses it provides may reflect the most popular result it finds rather than the factually correct one.

Is it right for pharma?

If pharma companies were to use ChatGPT-powered chatbots in their marketing models, for example in a product launch aimed at healthcare professionals, users might want to ask the bot questions about the drug or therapeutic to which the programme may not know the answer. In this situation, would the software inform the user that it did not know the answer? Or would it provide misinformation that could potentially damage the launch?

The issue grows further still after launch. When deciding whether to prescribe a new medication to a patient, a healthcare professional (HCP) could turn to the chatbot to research potential comorbidities or side effects related to the medication, trusting the software because it is embedded within the manufacturer’s own site.

Walsh supports these concerns by noting that “in many markets, content about prescription medications is not allowed to be published online, so the data scraped by the tool to generate answers will be heavily influenced by content on Wikipedia or Reddit as well as prominent sites like drugs.com”. Sites like these often use unverified and individual patient reviews to compare therapies, rather than clinical data.

While the programme has risks and rewards for HCPs looking for an answer to a question about a medicine or a health condition, the risk to patients could be far greater. “Its conversational style and personalised answers, which are delivered in a reassuring tone, are poised to connect emotionally with patients and carers.” This could make patients more susceptible to misinformation when using the software than when using traditional search mechanisms such as Google or social media.

Proceed with caution

So, what does the growing popularity of AI search engines like ChatGPT mean for pharma? Change is on the horizon, whether the industry is ready or not, and the consensus seems to be that ChatGPT may have great power in shaping it. As a result, it may be beneficial for any response from the software that contains health information to carry a recommendation directing the user to seek advice from an industry-approved website or HCP.

However, Simms still believes that ChatGPT will become “recognised for misinformation” and that it “should be kept away from healthcare due to its flaws”. He urges pharma, healthcare professionals and patients to be wary of the dangers of the tool. “Just as we know that a model’s Instagram feed rarely represents ‘real life’, we need to teach ourselves to be cautious about relying on the accuracy of information,” he says. Whatever the future holds for ChatGPT in pharma, the industry must keep a close eye on how the tool is used if it is to protect patients.