Author: Laith Gergi, EMJ, London, UK

Citation: EMJ. 2024;9[3]:10-13. https://doi.org/10.33590/emj/PFXG2411.


THE RESIDENT AND RESEARCH FELLOW SECTION PERSPECTIVE

Wurm opened the session with a presentation on how neurology trainees and research fellows at the beginning of their careers are navigating an evolving medical landscape marked by the early adoption of AI in clinical practice. He began by putting a question to AI itself: “What will AI integration in clinical neurology look like?” ChatGPT 3.5 (OpenAI, San Francisco, California, USA) responded that AI is poised to enhance the analysis of medical data; contribute to personalised treatment options; identify medication contraindications; and improve diagnostic accuracy, particularly in neuroimaging modalities like MRI and CT.

Wurm acknowledged these advancements but highlighted a significant gap in the integration of AI into medical education. He proposed that AI could be leveraged to link healthcare records with training resources, providing immediate access to relevant information for medical students and residents. Furthermore, AI-powered virtual patient interactions could simulate complex clinical scenarios, offering students tailored educational experiences that develop both technical and soft skills.

Wurm highlighted the patient-facing opportunities of AI in clinical neurology. Start-ups are outpacing public health systems and academia in developing chatbots for diagnosis and triage. In Europe, there are already ten health chatbots that patients are beginning to access, which answer questions in a similar way to healthcare professionals. The first chatbot that can mimic a therapeutic intervention has received Class IIa UKCA medical device certification and has been approved for medical use in the UK.1 The Limbic Access AI chatbot (Limbic, London, UK) is a mental health app that uses machine learning to identify eight common mental health disorders and triage patients seeking psychological support from the National Health Service (NHS) Talking Therapies services.1 Limbic Access is used by 25% of people accessing NHS Talking Therapies services and has saved an estimated 30,000 clinical hours.1 Wurm indicated that similar chatbots could be applied in neurology to alleviate symptoms in non-emergency situations when accessing an expert is challenging. However, any software at the point of contact for patients requires regulation to ensure that the information is accurate and that any need for human intervention can be quickly identified.

Wurm’s concluding remarks emphasised the unique perspective of young neurologists at the forefront of AI application. There is significant potential to leverage the power of AI to improve medical education and augment the delivery of patient care, including for tasks that could otherwise overwhelm human capabilities. Similar to how people feel comfortable relying on autopilot when flying, Wurm hopes that we can reach a point where AI in healthcare is readily accepted. Nevertheless, he acknowledged the importance of regulating AI in medical use to ensure accuracy, data privacy, and equitable access across all regions of Europe and the rest of the world because, currently, access to these technologies carries a significant cost.

NAVIGATING THE ETHICAL LANDSCAPE: IMPLICATIONS OF AI IN THE REALM OF NEURO-DATA

Marcello Ienca, College of Humanities at École polytechnique fédérale de Lausanne, Switzerland, presented on the ethical implications of AI in clinical neurology. He began by challenging the misconception that ethics is merely a discipline tasked with policing medicine and serving as a regulatory hurdle. Part of this misconception stems from viewing the primary goal of ethics as preventing harm, which fails to consider the other side of the coin: the promotion of good. Instead, Ienca framed ethics as a discipline focused on maximising human wellbeing, ensuring that technological innovation is designed and developed with this in mind.

Epidemiological data indicates that a large proportion of the global population is going to experience at least one neurological disorder in their lifetime. Therefore, Ienca stated that there is a moral obligation to accelerate technological innovation in the context of clinical neurotechnology, leveraging the full power that AI can bring to various domains of scientific enterprise. AI has the potential to improve neuroscience research, provide more accurate diagnoses, enable personalised therapy, and be embedded in neural interfaces. Ienca stressed that we must remove unnecessary obstacles preventing the translation of these technologies and the delivery of much-needed solutions worldwide.

However, some classical ethical considerations need to be addressed. The first one is privacy. Ienca discussed “neuro privacy,” the risk of revealing sensitive information through retrospective data mining. This can include the identification of digital biomarkers that are predictive of neurological characteristics. For example, AI models can use digital phenotyping and smartphone behaviour to identify dementia. This could potentially be abused by employers or healthcare insurance providers, leading to new forms of discrimination based on neurological features. In addition, over the last 20 years, there has been significant debate regarding brain reading, which is the possibility of extracting privacy-sensitive information by processing neural data. This debate has been revived in recent months, with numerous editorials exploring the topic, because AI and deep learning models have proved extremely effective at reconstructing visual and semantic content from neural activity. This reverse inference AI technology is needed clinically because, for example, it can power speech neuroprostheses to treat disorders like aphasia; but if this technology is abused, it could lead to more significant violations of privacy.

The final point that Ienca focused on was the problem of equitable access; it is essential that AI technology is used in clinical neuroscience in a way that democratises the technology and does not amplify existing inequalities. Ienca posed the issue of AI being used in the neurotech field, not to restore function in people with a disorder, but to augment and enhance human capabilities above normality. This could lead to novel forms of inequality at the cognitive level.

PERSPECTIVES ON THE INTEGRATION OF AI IN NEUROLOGY

Philippe Ryvlin, University Hospital of Lausanne, Switzerland, concluded the session by discussing the current state and future of AI integration in clinical neurology. Ryvlin had the opportunity to participate in the organisation of first-of-their-kind international conferences dedicated to AI in epilepsy and neurological disorders, hosted in the USA. Drawing on these conferences, Ryvlin emphasised that Europe is lagging behind the USA in the development of AI tools and their integration into the healthcare system. Europe must acknowledge this divergence and its potential impact on patient care.

A key focus of Ryvlin’s presentation was the advances of AI in diagnostics, with Ryvlin stating that the largest impact of AI in clinical practice will be in this field. Already, AI tools are used to detect abnormalities and interpret neuroimaging automatically. Specifically, this has been demonstrated by recent validation of an electroencephalogram (EEG)-based algorithm that can classify EEGs as normal or abnormal. This will help physicians review EEGs and neuroimaging faster, reducing the rate of missed abnormalities. By aiding neurologists in making more accurate diagnoses, less invasive testing will be required, and treatments will be guided more accurately.

Ryvlin discussed a paper published in 2023 by a research group at Google (Mountain View, California, USA) that compared their large language model Med-PaLM against clinicians on several diagnostic tasks.2 The study found that when clinicians and Med-PaLM were tasked with answering medical questions, their performance was almost equal (Med-PaLM 92.6% versus clinicians 92.9%) based on scientific and clinical consensus.2 Although the model had a higher likelihood of causing potential harm (2.3% compared to clinicians’ 1.3%), Ryvlin suggested that current optimised models might perform even better. He acknowledged the inherent risks but stressed the significant potential benefits of AI in augmenting clinical decision-making.

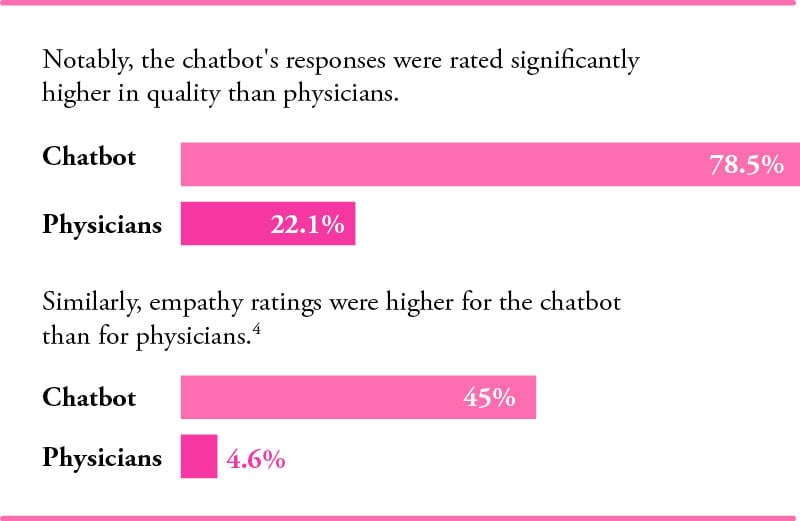

Ryvlin also referenced a recent publication where an academic group tested ChatGPT-4’s accuracy in diagnosing complex clinical cases.3 The study revealed that ChatGPT-4 correctly diagnosed 57% of cases, outperforming 99.8% of expert physicians.3 This finding underscores the potential of AI to enhance diagnostic accuracy significantly. In addition, Ryvlin discussed results from a recent study that compared physician and AI chatbot responses to patient queries on a public social media forum. Participants rated the quality and empathy of responses without knowing the source.4 Notably, the chatbot’s responses were rated significantly higher in quality (78.5%) than those of physicians (22.1%). Similarly, empathy ratings were higher for the chatbot (45%) than for physicians (4.6%).4 Ryvlin connected this to the potential use of AI in improving the soft skills of medical trainees, suggesting that AI could help identify and enhance empathetic communication. However, Ryvlin cautioned against allowing technology to fully replace human clinicians, highlighting the complexity of integrating AI into healthcare systems in a way that protects individuals’ employment and ensures patient interaction with healthcare professionals.

Concluding his presentation, Ryvlin stressed the transformative potential of AI in clinical neurology, urging the European medical community to develop and adapt its own AI solutions to avoid becoming overly dependent on costly technologies governed by aggressive businesses and non-European entities.

CONCLUSION

The session at EAN 2024 highlighted both the promise and the challenges of integrating AI into clinical neurology. While AI has the potential to revolutionise patient care, enhance diagnostic accuracy, and improve medical education, these benefits must be weighed against the ethical concerns and risks of inequality. The session underscored the importance of developing robust regulatory frameworks to ensure that AI technologies are safe, equitable, and used to augment rather than replace human expertise in neurology. As Europe navigates this complex landscape, it must strive to lead in AI innovation to ensure that these technologies are harnessed for the benefit of all.